Stumbling blocks and annoyances

NGINX and certbot’s default permissions

EFF’s certbot writes certificates to /etc/letsencrypt/live/<certificate hostname>/<files>.pem, and sets the permissions to only allow root to read the files. This makes sense from the perspective of a system where processes that need certificates will probably spawn as root, read the certificates to memory, and then spawn child processes that can use the memory-cached certificates.

Unfortunately, NGINX doesn’t do this when you use variables in the ssl_certificate(_key) configuration; nginx -t will pass with a configuration like

listen 443 ssl http2;

listen [::]:443 ssl http2;

ssl_certificate /etc/letsencrypt/live/$ssl_server_name/fullchain.pem;

ssl_certificate_key /etc/letsencrypt/live/$ssl_server_name/privkey.pem;

access_log /var/log/nginx/$ssl_server_name.access.log;

The problem only becomes apparent when trying to access that virtual host; NGINX attempts to read the file with the unprivileged process that’s handling the virtual host request, and the result in the error log is

[error] 26787#26787: *1 cannot load certificate "/etc/letsencrypt/live/$ssl_server_name/fullchain.pem": BIO_new_file() failed (SSL: error:8000000D:system library::Permission denied:calling fopen(/etc/letsencrypt/live/$ssl_server_name/fullchain.pem, r) error:10080002:BIO routines::system lib) while SSL handshaking, client: 10.68.0.1, server: 0.0.0.0:443

I liked the variable setup, because it meant I could just import a snippet into every configuration, reducing the amount of near-identical text in my configuration files. However, rather than muck around with things like changing the permissions of /etc/letsencrypt/archive from 0700 to something that grants group or world read, I opted to just go back to having every virtual host specify the SSL certificates explicitly.
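With literal paths, NGINX loads the certificate when it reads its configuration at startup, while it still has root privileges, so the permission problem does not come up. If the repetition is the main annoyance, the explicit directives could be generated rather than hand-copied. The following is only a sketch of that idea: the function name render_tls_snippet and the example hostname are made up for illustration, and the paths assume certbot's default live/ layout described above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print explicit certificate directives for one
# virtual host, using certbot's default live/<hostname>/ layout.
render_tls_snippet() {
    local host="$1"
    printf 'ssl_certificate /etc/letsencrypt/live/%s/fullchain.pem;\n' "$host"
    printf 'ssl_certificate_key /etc/letsencrypt/live/%s/privkey.pem;\n' "$host"
}

# Example: emit the two directives for a placeholder hostname.
render_tls_snippet www.example.org
```

The output can be redirected into a per-host file and pulled into the matching server block with an include directive.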

runcmd is not great with lots of entries

This one is also self-inflicted; just because runcmd can run commands, it doesn’t mean it’s a good idea to put a lot of commands in there. My runcmd section for the www container was getting rather long with all of the repetitive certbot calls, one per virtual host. The chosen solution was to write a bash script (with associative arrays, because why not) that mapped domains to the relevant credentials file, and then executed the same certbot command in a loop, substituting the domain and the credentials file appropriately. This reduced the www container from ~10 certbot calls to 1 shell script call; still takes as long because it’s done serially, but the YAML file is a bit easier to read. Hypothetically it can be done in parallel, but given this only happens at first container boot, it’ll be fine for the first boot in production to take a few minutes.
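A minimal sketch of what such a script might look like follows. It is not the actual script: the domains, the credentials paths, and the choice of the Cloudflare DNS plugin are all assumptions made for illustration, and it defaults to a dry run that only prints the commands.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical domain -> credentials file map; the real entries are not shown in this post.
declare -A CREDENTIALS=(
    [example.org]=/root/.secrets/example-org.ini
    [example.net]=/root/.secrets/example-net.ini
)

# Run (or, by default, just print) one certbot call per domain, serially.
# The DNS plugin (--dns-cloudflare) is an assumption; substitute the plugin
# that matches the credentials files in use.
issue_all() {
    local domain
    local -a cmd
    # Sort the keys so the order is reproducible between runs.
    for domain in $(printf '%s\n' "${!CREDENTIALS[@]}" | sort); do
        cmd=(certbot certonly --non-interactive
             --dns-cloudflare
             --dns-cloudflare-credentials "${CREDENTIALS[$domain]}"
             -d "$domain")
        if [[ "${DRY_RUN:-1}" == 1 ]]; then
            echo "${cmd[*]}"    # dry run: only show the command
        else
            "${cmd[@]}"         # real run: one certificate at a time
        fi
    done
}

issue_all
```

Setting DRY_RUN=0 would execute the commands instead of printing them; runcmd then only needs a single entry that calls the script.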

An alternative solution would have been to build out a certs container, let it do all of the fetching and renewals, and use deployment hooks to copy the certificates over to the other containers that need them. In fact, with LXD’s shared storage volume capabilities, it might even be possible to have a certs container running certbot (or any other ACME client) and a shared mount point across all containers. Not a great idea from an isolation perspective, but easier than having SSH installed in each container and doing key management.
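For the shared-volume variant, a deploy hook could be as small as the sketch below. This was not built for this setup; it only illustrates the idea. certbot exports RENEWED_LINEAGE (the live/<name> directory of the renewed certificate) to deploy hooks, while SHARED_CERT_DIR, the /srv/certs default, and the function name are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical deploy hook: copy a renewed certificate into a directory
# that is shared with the containers needing it.
deploy_renewed_cert() {
    local lineage="$1" shared_dir="$2"
    local name
    name="$(basename "$lineage")"
    mkdir -p "$shared_dir/$name"
    # install copies the file contents (following the live/ symlinks)
    # and sets the mode in one step.
    install -m 0640 "$lineage/fullchain.pem" "$shared_dir/$name/fullchain.pem"
    install -m 0640 "$lineage/privkey.pem" "$shared_dir/$name/privkey.pem"
}

# Only act when certbot has invoked the script as a deploy hook.
if [ -n "${RENEWED_LINEAGE:-}" ]; then
    deploy_renewed_cert "$RENEWED_LINEAGE" "${SHARED_CERT_DIR:-/srv/certs}"
fi
```

Such a script would be passed to certbot with --deploy-hook, and the consuming services would still need a reload to pick up the new files.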

Postfixadmin schema changes

The existing server has an older version of Postfixadmin, and the passwords are not crypted (an artifact of how I was doing authentication previously where Courier’s authdaemon and Postfix couldn’t agree on an encryption algorithm). The rebuild has moved the authentication layer into Dovecot running in the mail container, which removes the encryption conflict.

I settled on using mycli’s TSV output format to dump out the mailbox and alias tables, and applied a little bit of awk to reformat the tab-separated file into postfixadmin-cli incantations.

awk -F'\t' '{printf("postfixadmin-cli alias add %s --goto %s --active %d\n", $1, $2, $4)}' alias

Let’s Encrypt staging certificates versus Firefox

Quite correctly, Firefox does not trust the Let’s Encrypt staging certificates. This made it a little more difficult to test all of the websites (to make sure Roundcube worked and so on) before deploying to production. Trusting these certificates in the browser I use for every-day browsing is a terrible idea.

One approach to support testing is to

- Set up a new Firefox profile using the --ProfileManager argument

- Name that profile “Do Not Use For Internet” to remind myself

- Start a Firefox instance using that profile

git clone https://github.com/letsencrypt/website.git --sparse --depth 1 --filter=blob:none

cd website

git sparse-checkout set static/certs/staging

- Import the root .pem files into Firefox’s certificate store, trusting for web

Now that profile will happily trust the staging certificates in my test environment, and I can move on to testing things like Roundcube.

Roundcube (Debian/Ubuntu packaging)

Out of the package, Roundcube is configured with a random DB password in /etc/roundcube/debian-db.php. So the first action after finding this out was to update my grants.sql file with a create database if not exists, and a grant statement for the roundcube user. Try to load Roundcube’s UI, and it tells me that it cannot connect to the database.

This is a lie. It can connect just fine. What it can’t do is find the database tables that would normally be set up by the installer process if it was installed from a tarball. Confirmation of this fact can be found in /var/log/roundcube/errors.log; entries about being unable to write to the session table. This is fixed by (in this case) piping the SQL DDL from /usr/share/dbconfig-common/data/roundcube/install/mysql to the roundcube database (or there’s /usr/share/roundcube/SQL/mysql.initial.sql – diff says the files are identical).

With that fixed, the Roundcube UI shows up, and a login can be attempted.

Roundcube versus TLS failures

With the UI for Roundcube working, it’s login time. All of my mailbox configs have been loaded into the MariaDB table, and I’ve confirmed that Dovecot can find the accounts. Out of the box, Roundcube on Ubuntu has no imap_auth_type specified in /etc/roundcube/config.inc.php (which appears to be copied from /usr/share/roundcube/config.inc.php.sample), so it defaults to PLAIN. This matches up to Dovecot’s auth_mechanisms allowing plain. However, I was constantly seeing “tried to use disallowed plaintext auth” in the Dovecot logs, and Roundcube would not log in.

Set Dovecot’s login_trusted_networks to the bridge subnet IPv4, and everything works. This setting disables checks of the disable_plaintext_auth setting according to the docblock. This would be acceptable since the bridge network will never be connected to the public network in a way that allows sniffing of the bridge traffic. However, I dug some more, because I like to find root causes.

The root cause is that I forgot to set ‘tls://’ for $config['default_host'] in Roundcube, so Dovecot was correctly rejecting an attempt to use plaintext authentication (which lets it compare the unencrypted string against the salted SHA512-CRYPT passwords in the database) because it wasn’t encrypted. With ‘tls://’ prefixed on the IMAP host, the connection broke in another way. Dovecot recorded “SSL_accept() failed: error:0A000418:SSL routines::tlsv1 alert unknown ca: SSL alert number 48”, and Roundcube recorded “error:0A000086:SSL routines::certificate verify failed”.

I’m fairly sure this comes from using the Let’s Encrypt staging certificates, since the roots for those certificates are not in the CA certs file. The resolution was to reconfigure the IMAP connection settings in Roundcube (I won’t build the prod server with this configuration)

$config['imap_conn_options'] = array(

'ssl' => array('verify_peer' => false, 'verify_peer_name' => false),

'tls' => array('verify_peer' => false, 'verify_peer_name' => false)

);

The same configuration tweak is needed for the smtp_conn_options if the SMTP host is prefixed with ‘tls://’, as well as the managesieve plugin.

MariaDB versus systemd-resolved

To do virtual host testing, I’ve been editing /etc/hosts on the host machine (ie, my desktop computer) and adding the LXD-assigned IP of the container with the virtual host name. This works just fine if /etc/resolv.conf on the host machine is pointing to the configuration retrieved via DHCP. If it’s configured to point at the stub-resolv.conf, then things go a bit pear-shaped.

/run/systemd/resolve/stub-resolv.conf on the host points at a local nameserver instance that’s available on loopback via 127.0.0.53. That stub resolver uses the data from DHCP to find upstream resolvers for DNS queries. Per the systemd-resolved documentation, it uses /etc/hosts to do some name resolution as well.

The mappings defined in /etc/hosts are resolved to their configured addresses and back, but they will not affect lookups for non-address types (like MX). Support for /etc/hosts may be disabled with ReadEtcHosts=no, see resolved.conf(5).

This resolver reads and caches /etc/hosts internally. (In other words, nss-resolve replaces nss-files in addition to nss-dns). Entries in /etc/hosts have highest priority.

Hypothesis

LXD containers communicate with a dnsmasq instance that LXD owns. That dnsmasq instance appears to use the host system’s /etc/resolv.conf by default. Thus, even though the LXD dnsmasq has a full forward and backward mapping for the internal IPs, the RR queries being done by MariaDB to authenticate the connection are getting resolved by the host looking data up in /etc/hosts through the host’s systemd-resolved stub resolver.

Troubleshooting

The failure mode is that MariaDB (via the system resolver) evaluates 10.68.0.50 as <aliasname>, not as www.lxd.

First attempt, reconfigure the host’s resolver

- change /etc/systemd/resolved.conf on the host and set ReadEtcHosts=no,

- systemctl restart systemd-resolved,

- load Roundcube’s UI,

- failure.

Second attempt, check if the read of /etc/hosts is still happening

- keep first attempt changes,

- change /etc/hosts on the host to assign <aliasname2> to 10.68.0.50,

- load Roundcube’s UI,

- failure.

Third attempt, container in-memory cache?

- keep first and second attempt changes,

- reboot the db container in case it’s an in-memory cache on the container,

- load Roundcube’s UI,

- failure.

Try a different diagnostic; use resolvectl query on two containers and on the host

root@db:~# resolvectl query 10.68.0.50

10.68.0.50: <aliasname> -- link: eth0

root@db:~# resolvectl query 10.68.0.163

10.68.0.163: mx.lxd -- link: eth0

# Try a different container

root@mail:~# resolvectl query 10.68.0.50

10.68.0.50: <aliasname> -- link: eth0

# Try the host

user@host$ resolvectl query 10.68.0.50

10.68.0.50: <aliasname> -- link: lxdbr0

This means it’s not a cache in the container; two different containers have the same response, as does the host machine. The host machine response is a nice clue here, it’s saying the resolution came from lxdbr0. The resolver configuration (resolvectl status) on the host is

Global

Protocols: -LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

resolv.conf mode: stub

DNS Domain: local

Link 4 (lxdbr0)

Current Scopes: DNS

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Current DNS Server: 10.68.0.1

DNS Servers: 10.68.0.1

DNS Domain: ~lxd

Attempt four, see if it’s a cache in LXD’s dnsmasq

- keep all the previous changes, including the change in /etc/hosts for the alias

- kill -HUP <pid of dnsmasq on host>

- resolvectl query 10.68.0.50

- failure – now <aliasname2> is mapped to 10.68.0.50

Ok, so that confirms that LXD’s dnsmasq is where things are going sideways. LXD allows the dnsmasq configuration to be set in the configuration of the bridge. Since I only need to change one option, it can be assigned directly to the key; if there were multiple options, a literal style string would be needed.

Attempt five, change the LXD bridge dnsmasq configuration via lxc network edit lxdbr0

config:

ipv4.address: 10.68.0.1/24

ipv4.dhcp.expiry: 1d

ipv4.nat: "true"

raw.dnsmasq: no-hosts

user@host$ resolvectl query 10.68.0.50

10.68.0.50: www.lxd -- link: lxdbr0

root@db:~# resolvectl query 10.68.0.50

10.68.0.50: www.lxd -- link: eth0

Success! Refresh Roundcube’s UI. Still getting a connection error. Bounce MariaDB, because it probably has a cache for speed. Refresh Roundcube’s UI. Success!
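The reverse-lookup check used throughout this troubleshooting can be wrapped in a small helper so it is easy to repeat on the host and in each container. This is a sketch under assumptions: the function names ptr_name and check_ptr are invented here, and the parsing relies on the first output line of resolvectl query looking like the samples above.

```bash
#!/usr/bin/env bash
# Extract the hostname from the first line of `resolvectl query <ip>` output,
# e.g. "10.68.0.50: www.lxd -- link: eth0" -> "www.lxd".
ptr_name() {
    awk '{print $2; exit}'
}

# Succeed only if the reverse lookup for an address yields the expected name.
check_ptr() {
    local ip="$1" expected="$2" actual
    actual="$(resolvectl query "$ip" | ptr_name)"
    [ "$actual" = "$expected" ]
}

# Example (not run here): check_ptr 10.68.0.50 www.lxd && echo "PTR ok"
```

It should also be possible to set the bridge option without opening an editor, using lxc network set lxdbr0 raw.dnsmasq no-hosts, though that route was not the one taken here.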

Nextcloud in a snap

I decided to take a look at the Nextcloud snap, as an alternative way to install Nextcloud. What I was expecting was something more akin to an apt package – all the Nextcloud bits. What I got was a complete container of some sort, with redis, mysql, php-fpm, and apache2. Way more than I want, and way more opaque than I want. Promptly uninstalled – I’ll do it from tarball.

1100 ? Ss 0:00 snapfuse /var/lib/snapd/snaps/core18_2538.snap /snap/core18/2538 -o ro,nodev,allow_other,suid

1284 ? Ss 0:04 snapfuse /var/lib/snapd/snaps/nextcloud_31222.snap /snap/nextcloud/31222 -o ro,nodev,allow_other,suid

1662 ? Ss 0:00 /bin/sh -e /snap/nextcloud/31222/bin/start_mysql

1677 ? Ss 0:00 /bin/sh /snap/nextcloud/31222/bin/renew-certs

1684 ? Ss 0:00 /bin/sh -e /snap/nextcloud/31222/bin/nextcloud-fixer

1696 ? Ss 0:00 /bin/sh /snap/nextcloud/31222/bin/start-redis-server

1710 ? Ss 0:00 /bin/sh /snap/nextcloud/31222/bin/delay-on-failure mdns-publisher nextcloud

1732 ? Ss 0:00 /bin/sh /snap/nextcloud/31222/bin/nextcloud-cron

1744 ? Ss 0:00 /bin/sh /snap/nextcloud/31222/bin/start-php-fpm

1931 ? Sl 0:00 mdns-publisher nextcloud

2041 ? Sl 0:00 redis-server unixsocket:/tmp/sockets/redis.sock

2319 ? S 0:00 sleep 1d

2391 ? S 0:00 /bin/sh /snap/nextcloud/31222/bin/mysqld_safe --defaults-file=/snap/nextcloud/31222/my.cnf --datadir=/var/snap/nextcloud/31222/

2626 ? Sl 0:00 /snap/nextcloud/31222/bin/mysqld --defaults-file=/snap/nextcloud/31222/my.cnf --basedir=/snap/nextcloud/31222 --datadir=/var/sn

2809 ? Ss 0:00 php-fpm: master process (/snap/nextcloud/31222/config/php/php-fpm.conf)

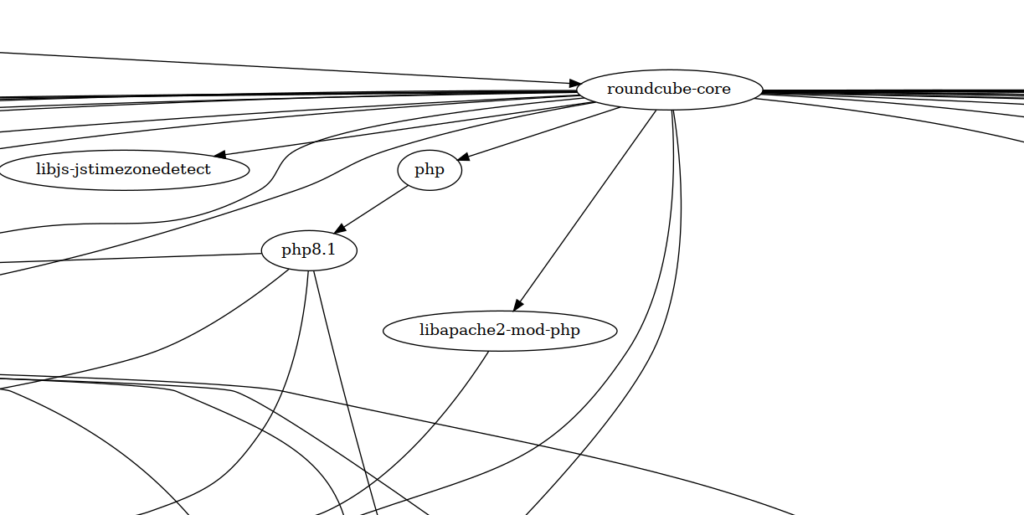

apt package dependencies

The www container will be running things like Roundcube and Postfixadmin. Both of these packages appear to have dependencies on mysql-server, and if that’s taken away from them, postgresql-14 gets installed instead. I don’t need any databases set up on the www container at all, so a bit of tweaking was needed for the package list provided to cloud-init. The documentation doesn’t say so explicitly, but if you’re using an apt-based system, you can use apt’s nomenclature of appending a minus sign at the end of the package name, and it’ll get filtered out from the list of things to install – even if it’s a dependency of another package.

packages:

- apache2-

- dovecot-core-

- git

- libapache2-mod-php-

- mariadb-client

- mariadb-server-

- mariadb-server-10.6-

- nginx

Since I explicitly want nginx, and roundcube transitively depends on some Apache packages, I flag apache2 for filtering by listing it as apache2-
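To make the trailing-minus convention concrete, the sketch below sorts a package list into the names apt would install and the names it would keep off the system. The function name classify_packages is made up, and the logic only mirrors the naming convention; it does not call apt.

```bash
#!/usr/bin/env bash
# Classify each argument by apt's trailing-minus convention:
# "name-" means "do not install name", anything else means "install".
classify_packages() {
    local pkg
    for pkg in "$@"; do
        case "$pkg" in
            *-) printf 'exclude %s\n' "${pkg%-}" ;;
            *)  printf 'install %s\n' "$pkg" ;;
        esac
    done
}

classify_packages apache2- git mariadb-client mariadb-server- nginx
```

To preview what apt itself would do with such a list, apt-get install --simulate followed by the same names shows the resolution without changing the system.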